Zettelgarden and Retrieval-Augmented Generation

Building retrieval-augmented generation into a personal information management system

Note: the following is a pretty technical discussion about retrieval-augmented generation (RAG) with a zettelkasten. The description of my zettelkasten and Zettelgarden, my app based on my paper card system, might be interesting, but the details of RAG may not be for the general reader.

I have been building a zettelkasten app, which I plan to blog about in more detail at some point. This post is about the zettelkasten process itself, and some recent developments in using retrieval-augmented generation and LLMs to gather insights and make connection. I’m leaving out a lot of the technical details other than at a high level, I will go into more architectural details in a future post.

Zettelkasten

I have been using the zettelkasten technique for a few years. It involves writing individual notes with single, atomic thoughts and storing them in a way that it is easy to find them. I started with an “analog” system, which is lingo for doing it on paper. It started out reasonably well, with cards looking like this:

The top left is a unique ID, which I ended up writing in blue. On the card 1, there is a “#REF2” which is a link to another card. Card REF2 has a link back to, saying [1] - Zettelkasten.

Why a zettelkasten? I was tired of not remembering everything that I was reading, and I was tired of forgetting things that I learned. I read How To Take Smart Notes by Sonke Ahrens which suggested this, then decided to go ahead with it. In theory, each card should be linked to each other card that is related to it. The point of this is that when you’re reading one card, it has references to other cards. These act as reminders, prompting yourself to remember what you’ve already learned.

This is great when you have a few cards, but as I came to learn this is very difficult and scales out of control, both in terms of physical writing, but also in just remembering that there are other things out there.

By September 2023 or so I had about 5000 cards. This is as useful as you might expect:

Not only did writing into the system become difficult as the card links grew exponentially, it became impossible to find anything. I decided to build my own system for this after about a year and a half, which I call Zettelgarden (https://github.com/NickSavage/Zettelgarden). The same card looks like this now:

Along the way, I built uploading files and tasks into it as well, because I wanted a way to keep everything in the same place. I have around 7000 cards now and Zettelgarden is well on its way to becoming something I can start letting others test/use/etc. I tried some of the other notes apps and never found something I really liked, which is why I’ve been building it myself. I spend much of my day in zettelgarden, Taking and reviewing notes. It’s nice to have a demanding user (myself) driving feature development.

This still hasn’t solved my fundamental problem though: If I am adding a card, I need to be able to consider if it is related to any other card. How can I find related cards across the card base when I can’t remember that they are there?

I’ve had a long standing wish since ChatGPT exploded in popularity to bring LLMs to my cards. I experimented early on with sending ChatGPT (well, the OpenAI API) lists of cards and asking it to pick out cards it though were related. This worked reasonably well, but lists of cards take up a lot of tokens. I found it ineffective if the list of cards was more than maybe 100. Chunking this didn’t work well because it was slow. I parked it and continued working on some other things, but came back to it recently.

Retrieval-Augmented Generation

I’ve known about retrieval-augmented generation (RAG) and vector databases for a while, but hadn’t really played with them before. Essentially, you use a specific LLM model to encode text into a vector (bunch of floats basically). You store this somewhere, and query by comparing one vector to another. And that’s basically it. If anything, I was surprised at how little work it was to set up. I’m using the mxbai-embed-large model for embeddings.

First thing I did was build a little proof of concept, something like this:

def get_cards(conn):

"""Retrieve the title and body from the cards table."""

try:

with conn.cursor() as cursor:

cursor.execute("SELECT id, title, body FROM cards;")

records = cursor.fetchall()

return records

except Exception as e:

print(f"Error fetching data: {e}")

return []

def update_card_embedding(conn, id, embedding):

"""Update the embedding column for a specific card."""

try:

with conn.cursor() as cursor:

# Convert the embedding to a PostgreSQL vector string format

embedding_str = '[' + ','.join(map(str, embedding)) + ']'

cursor.execute(

"UPDATE cards SET embedding = %s WHERE id = %s;",

(embedding_str, id)

)

# Commit after updating the batch of embeddings, not after every update

except Exception as e:

print(f"Error updating card {id}: {e}")

def generate_embeddings(text):

"""Generate embeddings by sending text to an API."""

try:

headers = {'Content-Type': 'application/json'}

payload = {

'model': 'mxbai-embed-large',

'prompt': text

}

response = requests.post(EMBEDDING_API_ENDPOINT, json=payload, headers=headers)

if response.status_code == 200:

return response.json().get('embedding') # Ensure this matches your response structure

else:

print(f"Error generating embeddings: {response.status_code} - {response.text}")

return None

except Exception as e:

print(f"Error communicating with the embedding API: {e}")

return NoneI have started with pgvector with my existing postgres database. I used this python script to calculate and store embeddings, then queried it with psql directly.

The proof of concept was a success beyond my imagination. Running it on my card base, it took about 30 minutes to generate all of the embeddings with Ollama running mxbai-embed-large running on a GTX 1660 Super (an old GPU). It was able to provide immediate value by identifying cards that I, as a human, could “oh yeah, I hadn’t thought of those connections before”. It is a little like magic to be honest because while generating embeddings was slow, comparing them is really fast, fast enough to be able to directly embed it into Zettelgarden.

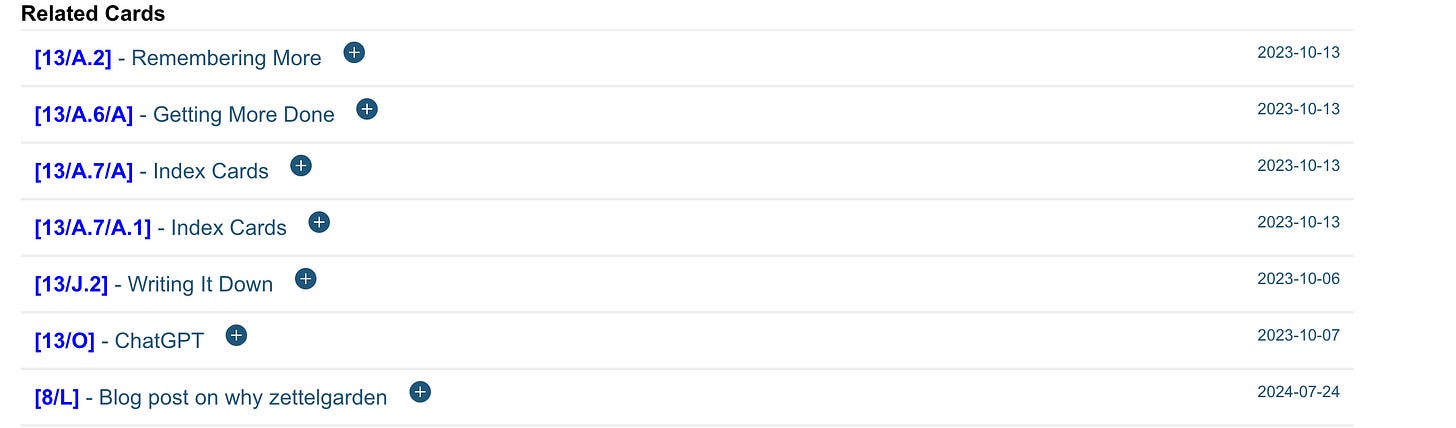

Once I was happy with that, I rewrote this into Go and built it into Zettelgarden directly. It’s now available for me directly on the interface because it is fast enough to generate these on the fly. Here are the results from the zettelkasten card:

Tweaking Results of RAG

Now, of course, the devil is in the details. The results it gives are alright, but not good enough. The above results from the zettelkasten card are fine, but don’t provide as much value as I’d like.

I noticed a number of problems after poking around for a few days. First of all, the card most related to any card is itself. I had to specifically scope it out. Then, children cards are very related to the parent cards. The app already makes it easy to find children of parents, so there is little value in filling related cards with that as well.

Here’s another example of the related cards to the card “Winston Churchill” (keep in mind, these are only based on cards that I’ve included):

It is considering that people are most related to other people, which while reasonable, isn’t really what I want. Here are some examples of cards that exist in the database, using a “classic” search, based on a literal search of title and body contents:

This is a little closer to where I’d like to be with related cards, maybe a combination of the two. Some of these were scoped out, since 1104 is the Churchill Card, but it would be nice to be able to pull out cards like 278/H which talks about Churchill directly

I also tried dumping the transcript of a podcast in to see what would happen, and by default it did a similar thing - it was able to pick up that it was a podcast and therefore related to other podcasts, but nothing about the content itself. Through this, I discovered from first principles chunking, breaking text up into smaller pieces to calculate embeddings for comparison. I’ve built that in now, and it works much better.

You can take a look at Zettelgarden on Github: https://github.com/NickSavage/Zettelgarden.