What I Learned Rebuilding Search Three Times

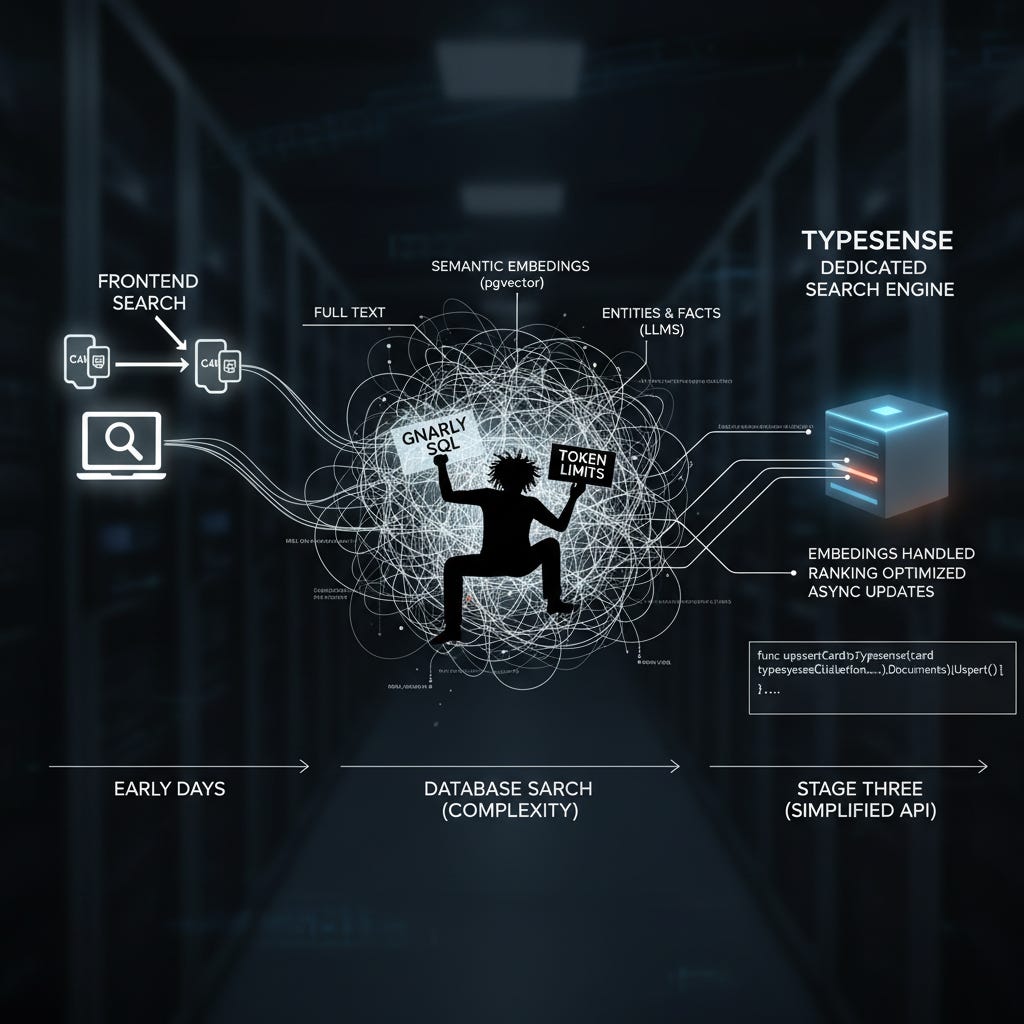

The evolution of Zettelgarden’s search: frontend hacks, brittle SQL, and finally a dedicated engine.

When I started Zettelgarden, I assumed search would be straightforward. But what seemed simple - type text, get results - quickly became one of the hardest parts of the system, forcing me to rethink the architecture more than once.

In this post, I want to walk through the different iterations of search in Zettelgarden as the project has grown, and share some of the lessons I’ve picked up along the way. I’m self-taught and have stumbled into problems first and then learned (the hard way) why those engineering “best practices” exist in the first place.

Early days: Frontend search

Initially, I loaded all cards into memory and filtered them in the frontend. It was blazing fast and trivial to implement. But with even a few thousand cards it choked, especially on mobile.

Database Search

The first scaling step was moving search out of the frontend and into Postgres. Instead of pulling everything down into memory, the app started sending queries directly to the database and returning just the matches. This approach was slower and more complex than in-memory filtering, but it could handle far more data.

It worked, and for a while that was enough. Over time though, I gradually started to bolt on new features:

Full text search: not just titles, but the entire card body.

Semantic search: embeddings stored in the database via

pgvector, which meant adding new database columns and ensuring inserts/updates handled vector generation.Entities and facts: Zettelgarden started using LLMs to extract structured information (people, concepts, facts) from cards and store those alongside them. Suddenly, I no longer had “just cards” to search through, I had three distinct data types, all relevant to a search query.

The last one, facts and entities, dramatically increased the complexity of search. My holds around 60,000 items across cards, entities and facts. Postgres can deal with that amount of data just fine. The real problem is the application code that ties it all together:

Each data type lives in a separate table. Do I try to write one enormous SQL query that joins across everything, or run three separate queries and merge the results? I’ve ended up with gnarly, brittle code to generate the required results.

I’ve been using Cohere’s reranking tools, but have run into token limits. Zettelgarden needs to choose upfront which slice of results went into the reranker, so good results sometimes got cut out entirely.

Switching embedding models means altering column types (

vector(768)tovector(1024)), rewriting migrations, updating ingest code, as well as handling both concurrently to ensure it continued to function.

The database search phase gave me far more power and flexibility than naive frontend filtering, but it also introduced a lot more complexity than I’d bargained for. Each decision on its own felt reasonable (“query the cards”, “add full text search”, etc), but together led to a suboptimal local maxima with complex, hard to change code.

Stage Three: Typesense

After wrestling with increasingly complex Postgres queries and ranking logic, I finally reached for a dedicated search engine: Typesense. It’s an in-memory search engine that’s fast, easy to run, and—most importantly—let me retire a lot of the custom, tangled search code I’d built up over time. Instead of writing elaborate SQL queries and application-side ranking logic, my search logic collapsed down into a set of simple API calls.

This switch brought some big advantages:

Embeddings handled natively: I no longer have to manage embedding generation and storage myself, Typesense does it.

Ranking done right: What had been a mess of SQL queries and reranking steps are now just tuning parameters inside the search engine. I’m still running it through Cohere, but I’d like to retire that

Async-friendly updates: Unlike user-facing queries, pushing or updating documents in Typesense doesn’t need to be synchronous. That gives me flexibility in how I sync data from Postgres to the search engine and hide it from the user.

The tradeoff is obvious: one less mess of SQL, one more moving part. Adding Typesense reduced the complexity of my search logic but increased the complexity of my system. It’s a tradeoff I feel good about - users get much faster and more relevant results, and I don’t have to manage ranking and embeddings myself.

While I need to sprinkle in calls to Typesense throughout, the code to actually work with Typesense is easy to reason with: convert into the schema of a typesense record, call an API function to post it. Comparing this to bashed together SQL strings, this Typesense code will be simpler to maintain over time:

func upsertCardToTypesense(card models.Card) {

record := convertCardToTypesenseRecord(card)

_, err := typesenseClient.Collection(collectionName).

Documents().Upsert(context.Background(), record)

}One downside is that contributors now need Postgres, S3, and Typesense running just to boot the project. Each dependency raises the barrier to entry, which I’m conflicted about.

Conclusions

Zettelgarden’s search has evolved from simple frontend filtering to tangled SQL to a dedicated search engine. Each phase worked until it didn’t, and each tradeoff taught me that building software is really about managing complexity. For me, that meant ripping out hundreds of lines of brittle SQL and replacing them with one API call. Every misstep has been an education: anyone can read about managing complexity, but you only really learn it by crashing your own system and digging your way out.